13 Best Data Analytics Bootcamps – Cost, Curriculum, and Reviews

Data analytics is one of the hottest career paths today. The market is booming, growing from \$82.23 billion in 2025 to an expected \$402.70 billion by 2032.
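For context, those two figures imply a compound annual growth rate of roughly 25%. Here is a minimal Python sketch of that arithmetic, using only the numbers quoted above (the function name `implied_cagr` is illustrative, not from any library):

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1

# Market-size figures quoted above, in billions of US dollars.
rate = implied_cagr(82.23, 402.70, 2032 - 2025)
print(f"Implied annual growth: {rate:.1%}")
```

This is the kind of quick sanity check an analyst might run before quoting a growth figure.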

That growth means opportunities everywhere. But it also means bootcamps are popping up left and right to fill that demand, and frankly, not all of them are worth your time or money. It's tough to know which data analytics programs actually deliver value.

Not every bootcamp fits every learner, either. Your background, goals, and learning style all matter when choosing the right path.

This guide is designed to cut through the noise. We’ll highlight the 13 best online data analytics bootcamps, break down costs, curriculum, and reviews, and help you find a program that can truly launch your career.

Why These Online Data Analytics Bootcamps Matter

Bootcamps are valuable because they focus on hands-on, practical skills from day one. Instead of learning theory in a vacuum, you work directly with the tools that data professionals rely on.

Most top programs teach Python, SQL, Excel, Tableau, and statistics through real datasets and guided projects. Many include mentorship, portfolio-building, career coaching, or certification prep.

The field is evolving quickly. Some bootcamps stay current and offer strong guidance, while others feel outdated or too surface-level. Choosing a well-built program ensures you learn in a structured way and develop skills that match what companies expect today.

What Will You Learn in a Data Analytics Bootcamp?

At its core, data analytics is growing because companies want clear, reliable insights. They need people who can clean data, write SQL queries, build dashboards, and explain results in a simple way.

A good data analytics bootcamp teaches you the technical and analytical skills you’ll need to turn raw data into clear, actionable insights.

The exact topics may vary by program, but most bootcamps cover these key areas:

| Topic | What You'll Learn |
|---|---|
| Data cleaning and preparation | How to collect, organize, and clean datasets by handling missing values, fixing errors, and formatting data for analysis. |
| Programming for analysis | Learn to use Python or R, along with libraries like Pandas, NumPy, and Matplotlib, to manipulate and visualize data. |
| Databases and SQL | Write SQL queries to extract, filter, and join data from relational databases, one of the most in-demand data skills. |
| Statistics and data interpretation | Understand descriptive and inferential statistics, regression, probability, and hypothesis testing to make data-driven decisions. |
| Data visualization and reporting | Use tools like Tableau, Power BI, or Microsoft Excel to build dashboards and communicate insights clearly. |
| Business context and problem-solving | Learn to frame business questions, connect data insights to goals, and present findings to non-technical audiences. |

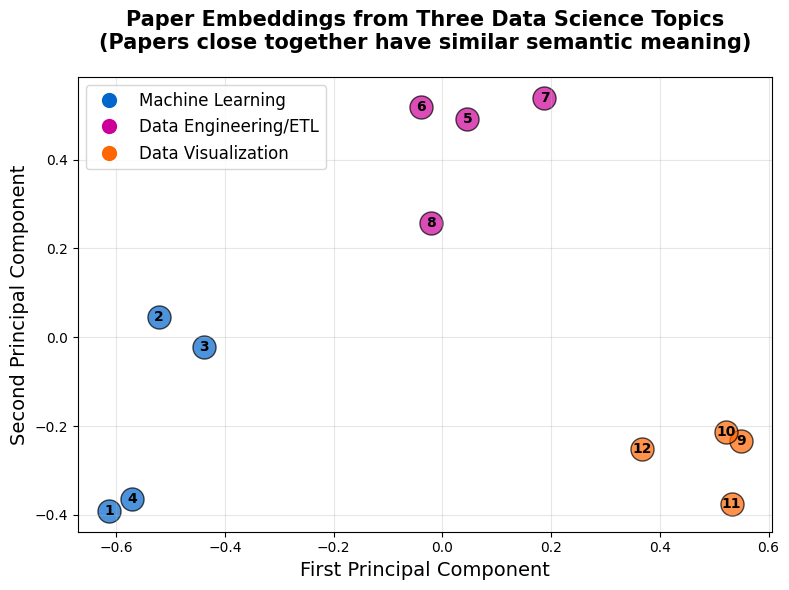
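To make the first few rows of that table concrete, here is a small sketch of a clean, query, and summarize workflow. It uses only Python's standard library (`sqlite3` and `statistics`) so it runs anywhere; the sample rows, the `orders` table, and the `clean` helper are all made up for illustration and are not taken from any bootcamp's curriculum.

```python
import sqlite3
from statistics import mean

# Made-up sample rows: (order_id, region, amount). Not real data.
raw_orders = [
    (1, " North ", "120.50"),
    (2, "south", None),        # missing amount
    (3, "North", "80.00"),
    (4, "SOUTH", "45.25"),
    (5, "north", "abc"),       # malformed amount
]

def clean(rows):
    """Normalize region names and drop rows whose amount is missing or not numeric."""
    cleaned = []
    for order_id, region, amount in rows:
        try:
            value = float(amount)
        except (TypeError, ValueError):
            continue  # skip rows that cannot be parsed as a number
        cleaned.append((order_id, region.strip().title(), value))
    return cleaned

orders = clean(raw_orders)

# Load the cleaned rows into an in-memory database and aggregate with SQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
totals = conn.execute(
    "SELECT region, COUNT(*), SUM(amount) FROM orders GROUP BY region ORDER BY region"
).fetchall()
conn.close()

# A simple descriptive statistic over the cleaned data.
average_order = mean(amount for _, _, amount in orders)

for region, n_orders, total in totals:
    print(f"{region}: {n_orders} orders, total {total:.2f}")
print(f"Average order value: {average_order:.2f}")
```

In a real program you would more likely do the cleaning step with Pandas and query an existing database, but the sequence of steps is the same.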

Some programs expand into machine learning, big data, or AI-powered analytics to help you stay ahead of new trends.

This guide focuses on the best online data analytics bootcamps, since they offer more flexibility and typically lower costs than in-person bootcamps.

1. Dataquest

Price: Free to start; paid plans for full access cost \$49 per month or \$588 per year.

Duration: ~8 months (recommended 5 hours per week).

Format: Online, self-paced.

Rating: 4.79/5

Key Features:

- Project-based learning with real data

- 27 interactive courses and 18 guided projects

- Learn Python, SQL, and statistics directly in the browser

- Clear, structured progression for beginners

- Portfolio-focused, challenge-based lessons

Dataquest’s Data Analyst in Python path isn’t a traditional bootcamp, but it delivers similar results for a much lower price.
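To put that price in numbers, here is a rough sketch comparing the two paid plans over the recommended ~8 months. It assumes the listed prices apply without discounts and that the annual plan is billed for whole years; both are assumptions for illustration, and `plan_costs` is a hypothetical helper.

```python
MONTHLY_PRICE = 49    # USD per month, from the pricing listed above
ANNUAL_PRICE = 588    # USD per year, from the pricing listed above

def plan_costs(months: int) -> dict:
    """Total cost of each plan when studying for the given number of months."""
    # Ceiling division: assume the annual plan is billed in whole years.
    years_billed = -(-months // 12)
    return {
        "monthly": MONTHLY_PRICE * months,
        "annual": ANNUAL_PRICE * years_billed,
    }

costs = plan_costs(8)
print(costs)
```

At the listed prices, twelve monthly payments add up to the annual price, so finishing in under a year on the monthly plan costs less under these assumptions.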

You’ll learn by writing Python and SQL directly in your browser and using libraries like Pandas, Matplotlib, and NumPy. The lessons show you how to prepare datasets, write queries, and build clear visuals step by step.

As you move through the path, you practice web scraping, work with APIs, and learn basic probability and statistics.

Each topic includes hands-on coding tasks, so you apply every concept right away instead of reading long theory sections.

You also complete projects that simulate real workplace problems. These take you through cleaning, analyzing, and visualizing data from start to finish. By the end, you have practical experience across the full analysis process and a portfolio of projects to show your work to prospective employers.

| Pros | Cons |
|---|---|
| ✅ Practical, hands-on learning directly in the browser | ❌ Text-based lessons might not suit every learning style |
| ✅ Beginner-friendly and structured for self-paced study | ❌ Some sections can feel introductory for experienced learners |
| ✅ Affordable compared to traditional bootcamps | ❌ Limited live interaction or instructor time |
| ✅ Helps you build a portfolio to showcase your skills | ❌ Advanced learners may want deeper coverage in some areas |

“Dataquest starts at the most basic level, so a beginner can understand the concepts. I tried learning to code before, using Codecademy and Coursera. I struggled because I had no background in coding, and I was spending a lot of time Googling. Dataquest helped me actually learn.” - Aaron Melton, Business Analyst at Aditi Consulting.

“Dataquest's platform is amazing. Cannot stress this enough, it's nice. There are a lot of guided exercises, as well as Jupyter Notebooks for further development. I have learned a lot in my month with Dataquest and look forward to completing it!” - Enrique Matta-Rodriguez.

2. CareerFoundry

Price: Around \$7,900 (payment plans available from roughly \$740/month).

Duration: 6–10 months (flexible pace, approx. 15–40 hours per week).

Format: 100% online, self-paced.

Rating: 4.66/5

Key Features:

- Dual mentorship (mentor + tutor)

- Hands-on, project-based curriculum

- Specialization in Data Visualization or Machine Learning

- Career support and job preparation course

- Active global alumni network

CareerFoundry’s Data Analytics Program teaches the essential skills for working with data.

You’ll learn how to clean, analyze, and visualize information using Excel, SQL, and Python. The lessons also introduce key Python libraries like Pandas and Matplotlib, so you can work with real datasets and build clear visuals.

The program is divided into three parts: Intro to Data Analytics, Data Immersion, and Data Specialization. In the final stage, you choose a track in either Data Visualization or Machine Learning, depending on your interests and career goals.

Each part ends with a project that you add to your portfolio. Mentors and tutors review your work and give feedback, making it easier to understand how these skills apply in real situations.

| Pros | Cons |
|---|---|
| ✅ Clear structure and portfolio-based learning | ❌ Mentor quality can be inconsistent |
| ✅ Good for beginners switching careers | ❌ Some materials feel outdated |
| ✅ Flexible study pace with steady feedback | ❌ Job guarantee has strict conditions |
| ✅ Supportive community and active alumni | ❌ Occasional slow responses from support team |

“The Data Analysis bootcamp offered by CareerFoundry will guide you through all the topics, but lets you learn at your own pace, which is great for people who have a full-time job or for those who want to dedicate 100% to the program. Tutors and Mentors are available either way, and are willing to assist you when needed.” - Jaime Suarez.

“I have completed the Data Analytics bootcamp and within a month I have secured a new position as data analyst! I believe the course gives you a very solid foundation to build off of.” - Bethany R.

3. Fullstack Academy

Price: \$6,995 upfront (discounted from \$9,995); \$7,995 in installments; \$8,995 with a loan option.

Duration: 10 weeks full-time or 26 weeks part-time.

Format: Live online.

Rating: 4.79/5

Key Features:

- Live, instructor-led format

- Tableau certification prep included

- GenAI lessons for analytics tasks

- Capstone project with real datasets

Fullstack Academy’s Data Analytics Bootcamp teaches the practical skills needed for entry-level analyst roles.

You’ll learn Excel, SQL, Python, Tableau, ETL workflows, and GenAI tools that support data exploration and automation.

The curriculum covers business analytics, data cleaning, visualization, and applied Python for analysis.

You can study full-time for 10 weeks or join the part-time 26-week schedule. Both formats include live instruction, guided workshops, and team projects.

Throughout the bootcamp, you’ll work with tools like Jupyter Notebook, Tableau, AWS Glue, and ChatGPT while practicing real analyst tasks.

The program ends with a capstone project you can add to your portfolio. You also receive job search support, including resume help, interview practice, and guidance from career coaches for up to a year.

It’s a good fit if you prefer structured, instructor-led learning and want a clear path to an entry-level data role.

| Pros | Cons |
|---|---|
| ✅ Strong live, instructor-led format | ❌ Fixed class times may not suit everyone |
| ✅ Clear full-time and part-time schedules | ❌ Some students mention occasional admin or billing issues |
| ✅ Tableau certification prep included | ❌ Job-search results can vary |
| ✅ Capstone project with real business data | |
| ✅ Career coaching after graduation | |

“The instructors are knowledgeable and the lead instructor imparted helpful advice from their extensive professional experiences. The student success manager and career coach were empathetic listeners and overall, kind people. I felt supported by the team in my education and post-graduation job search.” - Elaine.

“At first, I was anxious seeing the program, Tableau, SQL, all these things I wasn't very familiar with. Then going through the program and the way it was structured, it was just amazing. I got to learn all these new tools, and it wasn't very hard. Once I studied and applied myself, with the help of Dennis and the instructors and you guys, it was just amazing.” - Kirubel Hirpassa.

4. Coding Temple

Price: \$6,000 upfront (discounted from \$10,000); ~\$250–\$280/month installment plan; \$9,000 deferred payment.

Duration: About 4 months.

Format: Live online + asynchronous.

Rating: 4.77/5

Key Features:

- Daily live sessions and flexible self-paced content

- LaunchPad access with 5,000 real-world projects

- Lifetime career support

- Job guarantee (refund if no job in 9 months)

Coding Temple’s Data Analytics Bootcamp teaches the core tools used in today’s analyst roles, including Excel, SQL, Python, R, Tableau, and introductory machine learning.

Each module builds skills in areas like data analysis, visualization, and database management.

The program combines live instruction with hands-on exercises so you can practice real analyst workflows. Over the four-month curriculum, you’ll complete short quizzes, guided labs, and projects using tools such as Jupyter Notebook, PostgreSQL, Pandas, NumPy, and Tableau.

You’ll finish the bootcamp with a capstone project and a polished portfolio. The school also provides ongoing career support, including resume reviews, interview prep, and technical coaching.

This program is ideal if you want structure, accountability, and substantial practice with real-world datasets.

| Pros | Cons |
|---|---|
| ✅ Supportive instructors who explain concepts clearly | ❌ Fast pace can feel intense for beginners |
| ✅ Good mix of live classes and self-paced study | ❌ Job-guarantee terms can be strict |
| ✅ Strong emphasis on real projects and practical tools | ❌ Some topics could use a bit more depth |
| ✅ Helpful career support and interview coaching | ❌ Can be challenging to balance with a full-time job |
| ✅ Smaller class sizes make it easier to get help | |

"Enrolling in Coding Temple's Data Analytics program was a game-changer for me. The curriculum is not just about the basics; it's a deep dive that equips you with skills that are seriously competitive in the job market." - Ann C.

“The support and guidance I received were beyond anything I expected. Every staff member was encouraging, patient, and always willing to help, no matter how small the question.” - Neha Patel.

5. Springboard x Microsoft

Price: \$8,900 upfront (discounted from \$11,300); \$1,798/month (month-to-month, max 6 months); deferred tuition \$253–\$475/month; loan financing available.

Duration: 6 months, part-time.

Format: 100% online and self-paced with weekly mentorship.

Rating: 4.6/5

Key Features:

- Microsoft partnership

- Weekly 1:1 mentorship

- 33 mini-projects plus two capstones

- New AI for Data Professionals units

- Job guarantee with refund (terms apply)

Springboard's Data Analytics Bootcamp teaches the core tools used in analyst roles, with strong guidance and support throughout.

You’ll learn Excel, SQL, Python, Tableau, and structured problem-solving, applying each skill through short lessons and hands-on exercises.

The six-month program includes regular mentor calls, project work, and career development tasks. You’ll complete numerous exercises and two capstone projects that demonstrate end-to-end analysis skills.

You also learn how to use data to make recommendations, create clear visualizations, and present insights effectively.

The bootcamp concludes with a complete portfolio and job search support, including career coaching, mock interviews, networking guidance, and job search strategies.

It’s an ideal choice if you want a flexible schedule, consistent mentorship, and the added confidence of a job guarantee.

| Pros | Cons |
|---|---|
| ✅ Strong mentorship structure with regular 1:1 calls | ❌ Self-paced format requires steady discipline |
| ✅ Clear project workflow with 33 mini-projects and 2 capstones | ❌ Mentor quality can vary |
| ✅ Useful strategic-thinking frameworks like hypothesis trees | ❌ Less real-time instruction than fully live bootcamps |
| ✅ Career coaching that focuses on networking and job strategy | ❌ Job-guarantee eligibility has strict requirements |
| ✅ Microsoft partnership and AI-focused learning units | ❌ Can feel long if managing a full workload alongside the program |

“Those capstone projects are the reason I landed my job. Working on these projects also trained me to do final-round technical interviews where you have to set up presentations in Tableau and show your code in SQL or Python." - Joel Antolijao, Data Analyst at FanDuel.

“Springboard was a monumental help in getting me into my career as a Data Analyst. The course is a perfect blend between the analytics curriculum and career support which makes the job search upon completion much more manageable.” - Kevin Stief.

6. General Assembly

Price: \$10,000 paid in full (discounted), \$16,450 standard tuition, installment plans from \$4,112.50, and loan options including interest-bearing (6.5

Duration: 12 weeks full-time or 32 weeks part-time.

Format: Live online (remote) or on-campus at GA’s physical campuses (e.g., New York, London, Singapore) when available.

Rating: 4.31/5

Key Features:

- Prep work included before the bootcamp starts

- Daily instructor and TA office hours for extra support

- Access to alumni events and workshops

- Includes a professional portfolio with real data projects

General Assembly is one of the most popular data analytics bootcamps, with thousands of graduates each year and campuses in multiple major cities. Its program teaches the core skills needed for entry-level analyst roles.

You’ll learn SQL, Python, Excel, Tableau, and Power BI, while practicing how to clean data, identify patterns, and present insights. The lessons are structured and easy to follow, providing clear guidance as you progress through each unit.

Throughout the program, you work with real datasets and build projects that showcase your full analysis process. Instructors and TAs are available during class and office hours, so you can get support whenever you need it. Both full-time and part-time schedules include hands-on work and regular feedback.

Career support continues after graduation, offering help with resumes, LinkedIn profiles, interviews, and job-search planning. You also gain access to a large global alumni network, which can make it easier to find opportunities.

This bootcamp is a solid choice if you want a structured program and a well-known school name to feature on your portfolio.

| Pros | Cons |
|---|---|
| ✅ Strong global brand recognition | ❌ Fast pace can be tough for beginners |
| ✅ Large alumni network useful for job hunting | ❌ Some reviews mention inconsistent instructor quality |
| ✅ Good balance of theory and applied work | ❌ Career support depends on the coach you're assigned |
| ✅ Project-based structure helps build confidence | ❌ Can feel expensive compared to self-paced alternatives |

“The General Assembly course I took helped me launch my new career. My teachers were helpful and friendly. The job-seeking help after the program was paramount to my success post graduation. I highly recommend General Assembly to anyone who wants a tech career.” - Liam Willey.

“Decided to join the Data Analytics bootcamp with GA in 2022 and within a few months after completing it, I found myself in an analyst role which I could not be happier with.” - Marcus Fasan.

7. CodeOp

Price: €6,800 total with a €1,000 non-refundable down payment; €800 discount for upfront payment; installment plans available, plus occasional partial or full scholarships.

Duration: 7 weeks full-time or 4 months part-time, plus a 3-month part-time remote residency.

Format: Live online, small cohorts, residency placement with a real organization.

Rating: 4.97/5

Key Features:

- Designed specifically for women, trans, and nonbinary learners

- Includes a guaranteed remote residency placement with a real organization

- Option to switch into the Data Science bootcamp mid-bootcamp

- Small cohorts for closer instructor support and collaboration

- Mandatory precourse work ensures everyone starts with the same baseline

CodeOp’s Data Analytics Bootcamp teaches the main tools used in analyst roles.

You’ll work with Python, SQL, Git, and data-visualization libraries while learning how to clean data, explore patterns, and build clear dashboards. Pre-course work covers Python basics, SQL queries, and version control, so everyone starts at the same level.

A major benefit is the residency placement. After the bootcamp, you spend three months working part-time with a real organization, handling real datasets, running queries, cleaning and preparing data, building visualizations, and presenting insights. Some students may also transition into the Data Science track if instructors feel they’re ready.

Career support continues after graduation, including resume and LinkedIn guidance, interview preparation, and job-search planning. You also join a large global alumni network, making it easier to find opportunities.

This program is a good choice if you want a structured format, hands-on experience, and a respected school name on your portfolio.

| Pros | Cons |
|---|---|
| ✅ Inclusive program for women, trans, and nonbinary students | ❌ Residency is unpaid |
| ✅ Real company placement included | ❌ Limited spots because placements are tied to availability |
| ✅ Small class sizes and close support | ❌ Fast pace can be hard for beginners |
| ✅ Option to move into the Data Science track | ❌ Classes follow CET time zone |

“The school provides a great background to anyone who would like to change careers, transition into tech or just gain a new skillset. During 8 weeks we went thoroughly and deeply from the fundamentals of coding in Python to the practical use of data sciences and data analytics.” - Agata Swiniarska.

“It is a community that truly supports women++ who are transitioning to tech and even those who have already transitioned to tech.” - Maryrose Roque.

8. BrainStation

Price: Tuition isn’t listed on the official site, but CareerKarma reports it at \$3,950. BrainStation also offers scholarships, monthly installments, and employer sponsorship.

Duration: 8 weeks (one 3-hour class per week).

Format: Live online or in-person at BrainStation campuses (New York, London, Toronto, Vancouver, Miami).

Rating: 4.66/5

Key Features:

- Earn the DAC™ Data Analyst Certification

- Learn from instructors who work at leading tech companies

- Take live, project-based classes each week

- Build a portfolio project for your resume

- Join a large global alumni network

BrainStation’s Data Analytics Certification gives you a structured way to learn the core skills used in analyst roles.

You’ll work with Excel, SQL, MySQL, and Tableau while learning how to collect, clean, and analyze data. Each lesson focuses on a specific part of the analytics workflow, and you practice everything through hands-on exercises.

The course is taught live by professionals from companies like Amazon, Meta, and Microsoft. You work in small groups to practice new skills and complete a portfolio project that demonstrates your full analysis process.

Career support is available through their alumni community and guidance during the course. You also earn the DAC™ certification, which is recognized by many employers and helps show proof of your practical skills.

This program is ideal for learners who want a shorter, focused course with a strong industry reputation.

| Pros | Cons |
|---|---|
| ✅ Strong live instructors with clear teaching | ❌ Fast pace can feel overwhelming |
| ✅ Great career support (resume, LinkedIn, mock interviews) | ❌ Some topics feel rushed, especially SQL |
| ✅ Hands-on portfolio project included | ❌ Pricing can be unclear and varies by location |
| ✅ Small breakout groups for practice | ❌ Not ideal if you prefer slower, self-paced learning |
| ✅ Recognized brand name and global alumni network | ❌ Workload can be heavy alongside a job |

“The highlight of my Data Analytics Course at BrainStation was working with the amazing Instructors, who were willing to go beyond the call to support the learning process.” - Nitin Goyal, Senior Business Value Analyst at Hootsuite.

“I thoroughly enjoyed this data course and equate it to learning a new language. I feel I learned the basic building blocks to help me with data analysis and now need to practice consistently to continue improving.” - Caroline Miller.

9. Le Wagon

Price: Around €7,400 for the full-time online program (pricing varies by country). Financing options include deferred payment plans, loans, and public funding, depending on location.

Duration: 2 months full-time (400 hours) or 6 months part-time.

Format: Live online or in-person on 28+ global campuses.

Rating: 4.95/5

Le Wagon’s Data Analytics Bootcamp focuses on practical skills used in real analyst roles.

You’ll learn SQL, Python, Power BI, Google Sheets, and core data visualization methods. The course starts with prep work, so you enter with the basics already in place, making the main sessions smoother and easier to follow.

Most of the training is project-based. You’ll work with real datasets, build dashboards, run analyses, and practice tasks like cleaning data, writing SQL queries, and using Python for exploration.

The course also includes “project weeks,” where you’ll apply everything you’ve learned to solve a real problem from start to finish.

Career support continues after training. Le Wagon’s team will help you refine your CV, prepare for interviews, and understand the job market in your region. You’ll also join a large global alumni community that can support networking and finding opportunities.

It’s a good choice if you want a hands-on, project-focused bootcamp that emphasizes practical experience, portfolio-building, and ongoing career support.

| Pros | Cons |
|---|---|
| ✅ Strong global network for finding jobs abroad | ❌ Very fast pace; hard for beginners to keep up |
| ✅ Learn by building real projects from start to finish | ❌ Can be expensive compared to other options |
| ✅ Good reputation, especially in Europe | ❌ Teacher quality depends on your location |
| ✅ Great for career-changers looking to start fresh | ❌ You need to be very self-motivated to succeed |

"An insane experience! The feeling of being really more comfortable technically, of being able to take part in many other projects. And above all, the feeling of truly being part of a passionate and caring community!" - Adeline Cortijos, Growth Marketing Manager at Aktio.

“I couldn’t be happier with my experience at this bootcamp. The courses were highly engaging and well-structured, striking the perfect balance between challenging content and manageable workload.” - Galaad Bastos.

10. Ironhack

Price: €8,000 tuition with a €750 deposit. Pay in 3 or 6 interest-free installments, or use a Climb Credit loan (subject to approval).

Duration: 9 weeks full-time or 24 weeks part-time.

Format: Available online and on campuses in major European cities, including Amsterdam, Berlin, Paris, Barcelona, Madrid, and Lisbon.

Rating: 4.78/5

Key Features:

- 60 hours of prework, including how to use tools like ChatGPT

- Daily warm-up sessions before class

- Strong focus on long lab blocks for hands-on practice

- Active “Ironhacker for life” community

- A full Career Week dedicated to job prep

Ironhack’s Data Analytics Bootcamp teaches the core skills needed for beginner analyst roles. Before the program begins, you complete prework covering Python, MySQL, Git, statistics, and basic data concepts, so you feel prepared even if you’re new to tech.

During the bootcamp, you’ll learn Python, SQL, data cleaning, dashboards, and simple machine learning. You also practice using AI tools like ChatGPT to streamline your work. Each day includes live lessons, lab time, and small projects, giving you hands-on experience with each concept.

By the end, you’ll complete several projects and build a final portfolio piece. Career Week provides support with resumes, LinkedIn, interviews, and job search planning. You’ll also join Ironhack’s global community, which can help with networking and finding new opportunities.

It’s a good choice if you want a structured, hands-on program that balances guided instruction with practical projects and strong career support.

| Pros | Cons |
|---|---|
| ✅ Strong global campus network (Miami, Berlin, Barcelona, Paris, Lisbon, Amsterdam) | ❌ Fast pace can be tough for beginners |
| ✅ 60-hour prework helps level everyone before the bootcamp starts | ❌ Some students want deeper coverage in a few topics |
| ✅ Hands-on labs every day with clear structure | ❌ Career support results vary depending on student effort |
| ✅ Good community feel and active alumni network | ❌ Intense schedule makes it hard to balance with full-time work |

“Excellent choice to get introduced into Data Analytics. It's been only 4 weeks and the progress is exponential.” - Pepe.

“What I really value about the bootcamp is the experience you get: You meet a lot of people from all professional areas and that share the same topic such as Data Analytics. Also, all the community and staff of Ironhack really worries about how you feel with your classes and tasks and really help you get the most out of the experience.” - Josué Molina.

11. WBS Coding School

Price: €7,500 tuition with installment plans. Free if you qualify for Germany’s Bildungsgutschein funding.

Duration: 13 weeks full-time.

Format: Live online only, with daily instructor-led sessions from 9:00 to 17:30.

Rating: 4.84/5

Key Features:

- Includes PCEP certification prep

- 1-year career support after graduation

- Recorded live classes for easy review

- Daily stand-ups and full-day structure

- Backed by 40+ years of WBS TRAINING experience

WBS Coding School is a top data analytics bootcamp that teaches the core skills needed for analyst roles.

You’ll learn Python, SQL, Tableau, spreadsheets, Pandas, and Seaborn through short lessons and guided exercises. The combination of live classes and self-study videos makes the structure easy to follow.

From the start, you’ll practice real analyst tasks. You’ll write SQL queries, clean datasets with Pandas, create visualizations, build dashboards, and run simple A/B tests. You’ll also learn how to pull data from APIs and build small automated workflows.
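As an illustration of what a "simple A/B test" can look like in practice, here is a minimal sketch of a two-proportion z-test using only Python's standard library. The source doesn't say which test the course teaches, so treat this as one common approach rather than the school's method; the conversion counts are hypothetical and the helper name `two_proportion_z_test` is made up for this example.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts for illustration only: variant A vs. variant B.
z, p_value = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference between the two variants is unlikely to be due to chance alone, though sample size and test design still matter when interpreting it.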

In the final weeks, you’ll complete a capstone project that demonstrates your full workflow from data collection to actionable insights.

The program includes one year of career support, with guidance on CVs, LinkedIn profiles, interviews, and job search planning. As part of the larger WBS Training Group, you’ll also join a broad European community with strong hiring connections.

It’s a good choice if you want a structured program with hands-on projects and long-term career support, especially if you’re looking to connect with the European job market.

| Pros | Cons |
|---|---|
| ✅ Strong live-online classes with good structure | ❌ Very fast pace and can feel intense |
| ✅ Good instructors mentioned often in reviews | ❌ Teaching quality can vary by cohort |
| ✅ Real projects and a solid final capstone | ❌ Some students say support feels limited at times |
| ✅ Active community and helpful classmates | ❌ Career support could be more consistent |
| ✅ Clear workflow training with SQL, Python, and Tableau | ❌ Requires a full-time commitment that's hard to balance |

“I appreciated that I could work through the platform at my own pace and still return to it after graduating. The career coaching part was practical too — they helped me polish my resume, LinkedIn profile, and interview skills, which was valuable.” - Semira Bener.

"I can confidently say that this bootcamp has equipped me with the technical and problem-solving skills to begin my career in data analytics." - Dana Abu Asi.

12. Greenbootcamps

Price: Around \$14,000 (approximate; Greenbootcamps does not list its tuition publicly).

Duration: 12 weeks full-time.

Format: Fully online, Monday to Friday from 9:00 to 18:00 (GMT).

Rating: 4.4/5

Key Features:

- Free laptop you keep after the program

- Includes sustainability & Green IT modules

- Certification options: Microsoft Power BI, Azure, and AWS

- Career coaching with a Europe-wide employer network

- Scholarships for students from underrepresented regions

Greenbootcamps is a 12-week online bootcamp focused on practical data analytics skills.

You’ll learn Python, databases, data modeling, dashboards, and the soft skills needed for analyst roles. The program blends theory with daily hands-on tasks and real business use cases.

A unique part of this bootcamp is the Green IT component. You’re trained on how data, energy use, and sustainability work together. This can help you stand out in companies that focus on responsible tech practices.

You also get structured career support. Career coaches help with applications, interviews, and networking. Since the school works with employers across Europe, graduates often find roles within a few months. With a free laptop and the option to join using Germany’s education voucher, it’s an accessible choice for many learners.

It’s a good fit if you want a short, practical program with sustainability-focused skills and strong career support.

| Pros | Cons |
|---|---|
| ✅ Free laptop you can keep | ❌ No public pricing listed |
| ✅ Sustainability training included | ❌ Very few verified alumni reviews online |
| ✅ Claims 9/10 job placement | ❌ Long daily schedule (9 am–6 pm) |
| ✅ Career coaching and employer network | ❌ Limited curriculum transparency |
| ✅ Scholarships for disadvantaged students | |

“One of the best Bootcamps in the market they are very supportive and helpful. it was a great experience.” - Mirou.

“I was impressed by the implication of Omar. He followed my journey from my initial questioning and he supported my application going beyond the offer. He provided motivational letter and follow up emails for every step. The process can be overwhelming if the help is not provided and the right service is very important.” - Roxana Miu.

13. Developers Institute

Price: 23,000–26,000 ILS (approximately \$6,000–\$6,800 USD), depending on schedule and early-bird discounts. Tuition is paid in ILS.

Duration: 12 weeks full-time or 20 weeks part-time.

Format: Online, on-campus (Israel), or hybrid.

Rating: 4.94/5

Key Features:

- Mandatory 40-hour prep course

- AI-powered learning platform

- Optional internship with partner startups

- Online, on-campus, and hybrid formats

- Fully taught in English

Developers Institute’s Data Analytics Bootcamp is designed for beginners who want clear structure and support.

You’ll start with a 40-hour prep course covering Python basics, SQL queries, data handling, and version control, so you’re ready for the main program.

Both part-time and full-time tracks include live lessons, hands-on exercises, and peer collaboration. You’ll learn Python for analysis, SQL for databases, and tools like Tableau and Power BI for building dashboards.

A key part of the program is the internship option. Full-time students can complete a 2–3 month placement with real companies, working on actual datasets. You’ll also use Octopus, their AI-powered platform, which offers an AI tutor, automatic code checking, and personalized quizzes.

Career support begins early and continues throughout the program. You’ll get guidance on resumes, LinkedIn, interview prep, and job applications.

It’s ideal for people who want a structured, supportive program with hands-on experience and real-world practice opportunities.

| Pros | Cons |
|---|---|
| ✅ AI-powered learning platform that guides your practice | ❌ Fast pace that can be hard for beginners |
| ✅ Prep course that helps you start with the basics | ❌ Career support can feel uneven |
| ✅ Optional internship with real companies | ❌ Tuition paid in ILS, which may feel unfamiliar |
| ✅ Fully taught in English for international students | ❌ Some lessons move quickly and need extra study |

“I just finished a Data Analyst course in Developers Institute and I am really glad I chose this school. The class are super accurate, we were learning up-to date skills that employers are looking for.” - Anaïs Herbillon.

“Finished a full-time data analytics course and really enjoyed it! Doing the exercises daily helped me understand the material and build confidence. Now I’m looking forward to starting an internship and putting everything into practice. Excited for what’s next!” - Margo.

How to Choose the Right Data Analytics Bootcamp for You

The best data analytics bootcamp isn’t the same for everyone. A program that’s perfect for one person might not work well for someone else, depending on their schedule, learning style, and goals.

To help you find the right one for you, keep these tips in mind:

Tip #1: Look at the Projects You’ll Actually Build

Instead of only checking the curriculum list, look at project quality. A strong bootcamp shows examples of past student projects, not just a generic promise that “you’ll build dashboards.”

You want projects that use real datasets, include SQL, Python, and visualizations, and look like something you’d show in an interview. If the projects look too simple, your portfolio won’t stand out.
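
To make this concrete, here is a minimal sketch of what the core of such a project might look like: a SQL aggregation run from Python, followed by a simple visualization. The `ORDERS` data, the table name, and both function names are hypothetical and made up for illustration; a real portfolio project would use an actual public dataset and a proper charting tool such as Tableau or Power BI.

```python
import sqlite3

# Hypothetical sample data: (order_id, region, amount_usd).
# A real project would load a genuine dataset instead.
ORDERS = [
    (1, "North", 120.0),
    (2, "South", 80.0),
    (3, "North", 200.0),
    (4, "West", 50.0),
    (5, "South", 150.0),
]


def revenue_by_region(rows):
    """Load rows into an in-memory SQLite table and aggregate them with SQL."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (order_id INTEGER, region TEXT, amount_usd REAL)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        query = """
            SELECT region, SUM(amount_usd) AS revenue
            FROM orders
            GROUP BY region
            ORDER BY revenue DESC, region ASC
        """
        return conn.execute(query).fetchall()
    finally:
        conn.close()


def text_bar_chart(results, width=20):
    """Render a plain-text bar chart, scaled to the largest value."""
    top = max(revenue for _, revenue in results)
    lines = []
    for region, revenue in results:
        bar = "#" * round(width * revenue / top)
        lines.append(f"{region:<6} {bar} {revenue:.0f}")
    return lines


if __name__ == "__main__":
    for line in text_bar_chart(revenue_by_region(ORDERS)):
        print(line)
```

Even a small example like this touches the three pieces interviewers tend to ask about: getting data into a database, summarizing it with SQL, and presenting the result visually.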

Tip #2: Check How “Job Ready” the Support Really Is

Every bootcamp says it offers career help, but the level of support varies a lot. The best programs show real outcomes, have coaches who actually review your portfolio in detail, and provide mock interviews with feedback.

Some bootcamps only offer general career videos or automated resume scoring. Look for ones that give real human feedback and track student progress until you’re hired.

Tip #3: Pay Attention to the Weekly Workload

Bootcamps rarely say this clearly: the main difference between finishing strong and burning out is how realistic the weekly time requirement is.

If you work full-time, a 20-hour-per-week program might be too much. If you can commit more hours, choose a program with heavier practice because you’ll learn faster. Match the workload to your life, not the other way around.
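
One rough way to sanity-check a program's workload is to budget your week on paper. The sketch below does that arithmetic in Python. Only the 20-hour program figure comes from the example above; the job, sleep, and "other commitments" numbers are placeholder assumptions you should replace with your own, and the function names are hypothetical.

```python
HOURS_PER_WEEK = 7 * 24  # 168 hours in a week


def free_hours_per_week(job_hours, sleep_per_night=8.0, other_hours=0.0):
    """Hours left in a week after work, sleep, and other fixed commitments."""
    return HOURS_PER_WEEK - job_hours - 7 * sleep_per_night - other_hours


def workload_share(program_hours, free_hours):
    """Fraction of your free time that the program would consume."""
    if free_hours <= 0:
        raise ValueError("no free hours available")
    return program_hours / free_hours


if __name__ == "__main__":
    # Assumed: 40-hour job, 8 hours of sleep per night, and 35 hours
    # for commuting, meals, chores, and family.
    free = free_hours_per_week(job_hours=40, sleep_per_night=8, other_hours=35)
    share = workload_share(program_hours=20, free_hours=free)
    print(f"Free hours per week: {free}")
    print(f"Share used by a 20-hour program: {share:.0%}")
```

With these placeholder numbers, 37 free hours remain, so a 20-hour program would take a bit more than half of them. If your own numbers leave little margin, a lighter or self-paced program is probably the safer choice.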

Tip #4: See How Fast the Bootcamp Updates Content

Data analytics changes quickly. Some bootcamps don’t update their material for years.

Look for signs of recent updates, like new modules on AI, new Tableau features, or modern Python libraries. If the examples look outdated or the site shows old screenshots, the content probably is too.

Tip #5: Check the Community, Not Just the Curriculum

A strong student community (Slack, Discord, alumni groups) is an underrated part of a good bootcamp.

Helpful communities make it easier to get unstuck, find study partners, and learn faster. Weak communities mean you’re basically studying alone.

Career Options After a Data Analytics Bootcamp

A data analytics bootcamp prepares you for several entry-level and mid-level roles.

Most graduates start in roles that focus on data cleaning, data manipulation, reporting, and simple statistical analysis. These jobs exist in tech, finance, marketing, healthcare, logistics, and many other industries.

Data Analyst

You work with R, SQL, Excel, Python, and other data analytics tools to find patterns and explain what the data means. This is the most common first role after a bootcamp.

Business Analyst

You analyze business processes, create reports, and help teams understand trends. This role focuses more on operations, KPIs, and communication with stakeholders.

Business Intelligence Analyst

You build dashboards in tools like Tableau or Power BI and turn data into clear visual insights. This role is a good fit if you enjoy visualization and reporting.

Junior Data Engineer

Some graduates move toward data engineering if they enjoy working with databases, ETL pipelines, and automation. This path requires stronger technical skills but is possible with extra study.

Higher-level roles you can grow into

As you gain more experience, you can move into roles like data analytics consultant, product analyst, BI developer, or even data scientist if you continue to build skills in Python, machine learning, and model evaluation.

A bootcamp gives you the foundation. Many graduates start as data analysts or business analysts, and from there your portfolio, projects, communication skills, and consistency will determine how far you grow in the field.

FAQs

Do you need math for data analytics?

You only need basic math. Simple statistics, averages, percentages, and basic probability are enough to start. You do not need calculus or advanced formulas.
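
To give a sense of the level involved, here is a small sketch of the kind of math a beginner analyst typically does, using only Python's standard library. The weekly sign-up numbers and the `percent_change` helper are hypothetical, chosen purely for illustration.

```python
from statistics import mean, median

# Hypothetical weekly sign-up counts for a product.
signups = [120, 135, 150, 110, 185]


def percent_change(old, new):
    """Percentage change from an old value to a new value."""
    return (new - old) / old * 100


average = mean(signups)    # simple average
middle = median(signups)   # middle value once the list is sorted
growth = percent_change(signups[0], signups[-1])  # first week vs. last week

# Basic probability: the share of weeks with more than 130 sign-ups.
p_above_130 = sum(1 for s in signups if s > 130) / len(signups)

if __name__ == "__main__":
    print(f"Average: {average}")
    print(f"Median: {middle}")
    print(f"Growth from first to last week: {growth:.1f}%")
    print(f"Share of weeks above 130: {p_above_130:.0%}")
```

If you can follow what each line computes, you already have enough math to start most beginner programs.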

How much do data analysts earn?

Entry-level salaries vary by location. In the US, new data analysts usually earn between \$60,000 and \$85,000. In Europe, salaries range from €35,000 to €55,000 depending on the country.

What is the difference between data analytics and data science?

Data analytics focuses on dashboards, SQL, Excel, and answering business questions. Data science includes machine learning, deep learning, and model building. Analytics is more beginner-friendly and faster to learn.

Is a data analyst bootcamp worth it?

It can be worth it if you want a faster way into tech and are ready to practice consistently. Bootcamps give structure, projects, career services, and a portfolio that helps with job applications.

How do bootcamps compare to a degree?

A degree in computer science takes years and focuses more on theory, while a data analytics bootcamp takes months and focuses on practical skills. For most entry-level data analyst jobs, a bootcamp plus a solid portfolio of projects is enough.